This is a Mastodon thread. The original thread is available here:

Intro

🚨 Preprint alert! 🚨

#Psychology #OpenScience #Measurement #Validity #RStats @rstats@gup.pe

Ever had trouble understanding exactly how to interpret a construct’s definition?

Ever wondered why different articles seem to have different definitions of the same construct?

Ever wanted a solution for this?

We address this issue and present a set of #OpenSource tools to deal with it!

🧵 1/15

Replication, measurement, and theory crises

The replication crisis (or credibility revolution) revealed an underlying “measurement crisis” and a “theory crisis”. One symptom of this theory crisis is the “jingle jangle jungle”, both at the conceptual level and in measurement 🤔

On the one hand, constructs or measurement instruments that are purportedly different sometimes turn out, on closer inspection, to be practically identical. This prevents accumulation of knowledge and hinders evidence syntheses.

🧵 2/15

On the other hand, sometimes measurement instruments supposedly measuring the same thing exhibit substantial differences in content, or in qualitative studies about the same construct, codebooks vary substantially. This means the findings from such studies cannot be compared or aggregated.

🧵 3/15

Construct Definitions

One cause of this is how brief construct definitions in psychology tend to be. Most appear to be about 20 words or fewer: exceptionally concise for things that cannot be directly observed.

As a consequence, researchers using such concepts must first elaborate those definitions for themselves. Such elaborations, however, often remain hidden.

🧵 4/15

This heterogeneity in “study-level elaborated construct definitions” is in itself not bad. Epistemic diversity has a number of benefits, and studying multiple variations of construct definitions seems sensible.

However, it is a problem that this heterogeneity is hidden.

So: we want to avoid curation of construct definitions by a central authority, but simultaneously eliminate this hidden heterogeneity.

🧵 5/15

Decentralized Construct Taxonomies

Enter: Decentralized Construct Taxonomies 💫

The idea is simple; a way to openly share:

1️⃣ A unique construct identifier

2️⃣ A human-readable label

3️⃣ A comprehensive construct definition

4️⃣ Instructions for developing measurement instruments

5️⃣ Instructions for identifying/coding measurement instruments

6️⃣ Instructions for qualitative research

These DCT specifications can easily be shared, re-used, or adapted.
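
To make the six parts concrete, here is a minimal Python sketch of a DCT specification as a plain record. It is an illustration only: the class name, the field names, and all example values are hypothetical placeholders, not the format defined in the preprint or used by the tools.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class DCTSpec:
    """One DCT specification. All field names here are hypothetical."""
    construct_id: str   # 1: unique construct identifier
    label: str          # 2: human-readable label
    definition: str     # 3: comprehensive construct definition
    measure_dev: str    # 4: instructions for developing measurement instruments
    measure_code: str   # 5: instructions for identifying/coding measurement instruments
    qualitative: str    # 6: instructions for qualitative research

    def missing_parts(self) -> list[str]:
        """Return the names of the parts that are still empty."""
        return [name for name, value in asdict(self).items() if not value.strip()]


# Placeholder content, made up purely for illustration.
spec = DCTSpec(
    construct_id="example_construct_0001",
    label="Example construct",
    definition="A placeholder definition, written out in full sentences.",
    measure_dev="Placeholder: how to write items that measure this construct.",
    measure_code="Placeholder: how to recognise instruments that measure it.",
    qualitative="",  # not written yet
)

print(spec.missing_parts())
```

Keeping all six parts in one record makes it easy to see which ones a team has not yet written down.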

🧵 6/15

By combining the comprehensive construct definitions with corresponding instructions for using the construct in quantitative and/or qualitative research, there will hopefully be less need for individual researchers to “fill in the blanks” when they design a study.

In addition, DCT specifications can easily be adapted. This way everybody can redefine constructs if they want to - and through the unique identifiers it’s always clear exactly which construct definition is used.

🧵 7/15

Producing comprehensive construct definitions

We explain a number of ways to arrive at such comprehensive construct definitions, such as seeking expert consensus, using the Delphi approach, conducting scoping reviews, and conducting qualitative and quantitative primary research.

One method is basically documenting the comprehensive, elaborated definitions your research team uses. No extra work, but less ambiguity and more transparency and openness!

🧵 8/15

The point is that to decrease the hidden heterogeneity, any effort to explicate construct definitions is helpful.

For example, in your research team, you could try the following.

Get together, take a construct you’re studying, and independently write down comprehensive definitions.

You’ll likely end up with different definitions. In some cases, the differences will be small; in others, larger.
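
If you want a rough starting point for that comparison, a small Python sketch like the one below can flag which pairs of definitions share the fewest words. This is not a method from the preprint: word overlap (Jaccard similarity) is a crude proxy, and the three definitions are invented for illustration. It only helps decide which differences to discuss first.

```python
import re
from itertools import combinations


def word_set(text: str) -> set[str]:
    """Lower-case the text and return its set of distinct words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of distinct words that two texts have in common (0 to 1)."""
    words_a, words_b = word_set(a), word_set(b)
    if not words_a and not words_b:
        return 1.0  # two empty texts are treated as identical
    return len(words_a & words_b) / len(words_a | words_b)


# Invented example definitions, one per team member.
definitions = {
    "researcher_a": "an overall evaluation of a behaviour as good or bad",
    "researcher_b": "an overall evaluation of a behaviour as pleasant or unpleasant",
    "researcher_c": "expected consequences of a behaviour",
}

# Compare every pair of definitions once.
for (name_a, text_a), (name_b, text_b) in combinations(definitions.items(), 2):
    print(f"{name_a} vs {name_b}: {jaccard(text_a, text_b):.2f}")
```

Low overlap does not prove conceptual disagreement, and high overlap does not prove agreement; the numbers are only a prompt for the conversation.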

🧵 9/15

Once you have done this, you can start discussing these differences in “implicit elaborated construct definitions”. This will allow you to make sure you’re all on the same page regarding what exactly you’re studying.

Or, alternatively, you could design studies based on where you differ, which can then feed into further theory development.

In any case, this will help you to be more transparent: more Open Science!

🧵 10/15

Five tools for better construct definitions

We also discuss how to work with decentralized construct taxonomies in practice. First, we introduce five tools we developed:

1️⃣ A YAML standard for DCTs

2️⃣ The {psyverse} #rstats 📦

3️⃣ Unique Construct Identifiers

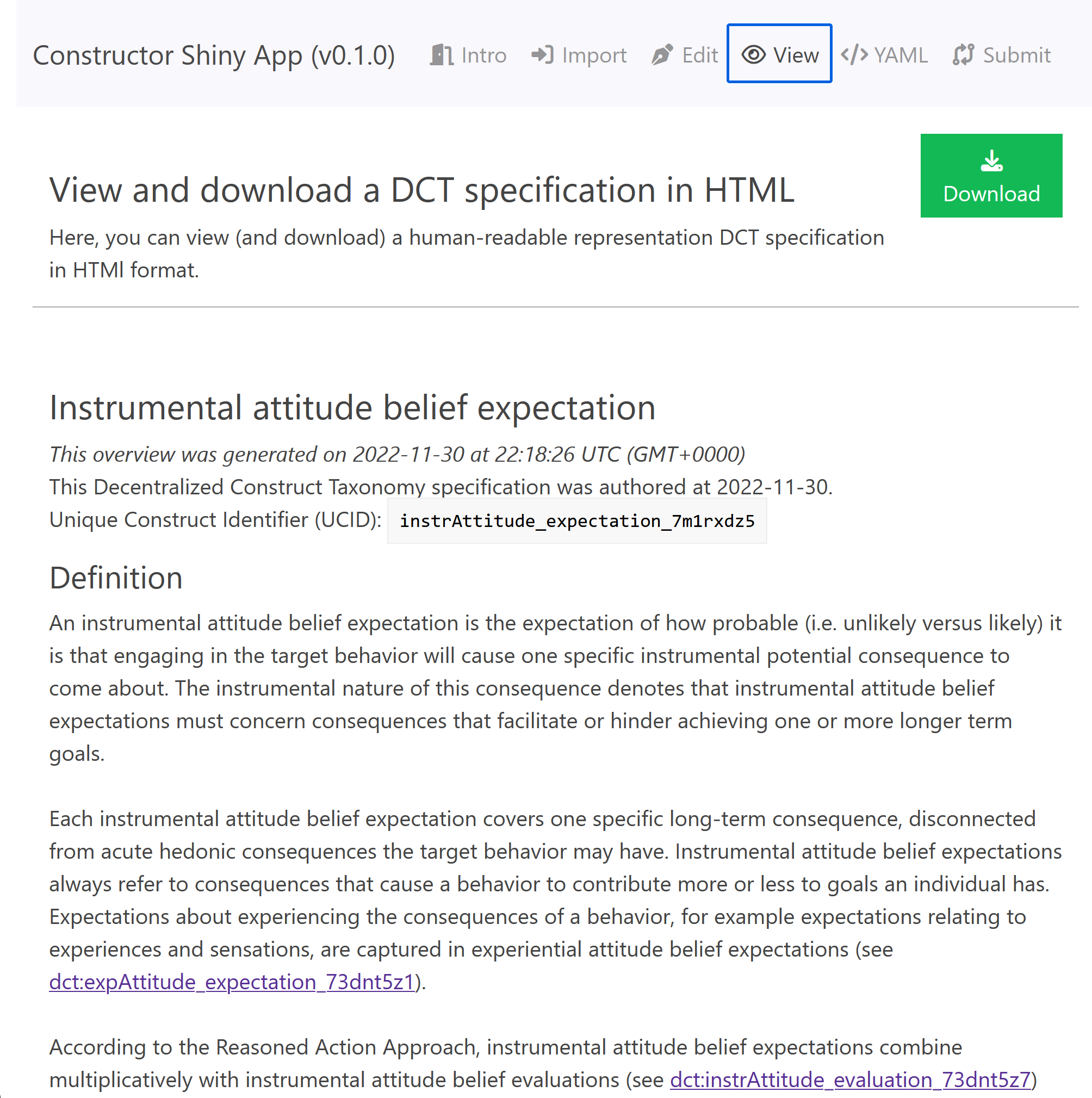

4️⃣ The Constructor Shiny App 🏗️

5️⃣ The open source Psychological Construct Repository, PsyCoRe.one

🧵 11/15

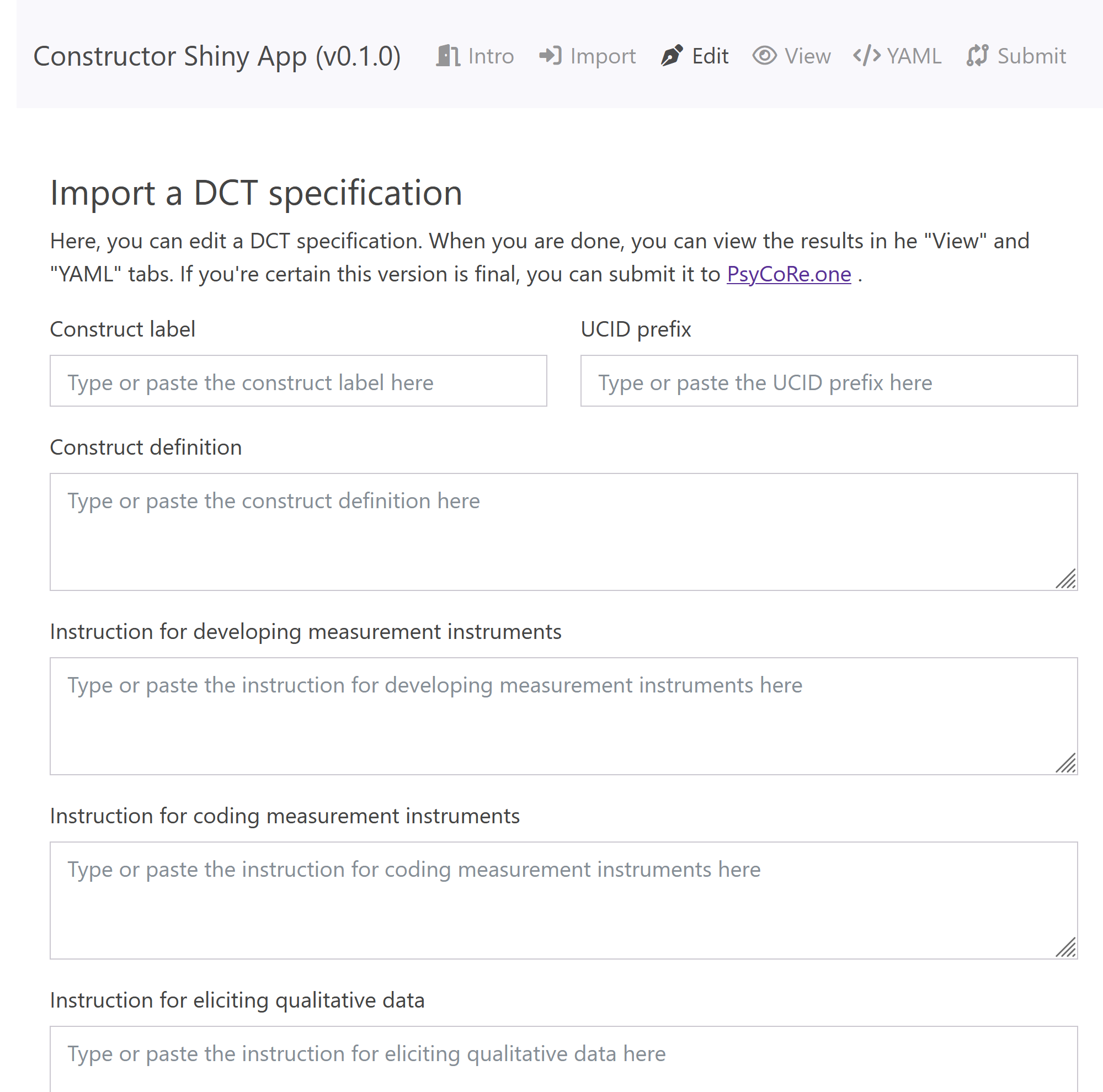

The Constructor Shiny app (at https://psycore.one/constructor), for example, allows you to:

1️⃣ Type in a DCT specification (a comprehensive construct definition and the corresponding instructions for using it);

2️⃣ Or import it from a spreadsheet (or a Google Sheets URL);

3️⃣ Have it create a Unique Construct Identifier;

4️⃣ Download the .YAML file;

5️⃣ And/or submit it to PsyCoRe.one.
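
As a rough picture of what such a workflow involves, the Python sketch below builds an identifier and writes a specification out as YAML text. Everything specific in it is an assumption made for illustration: the identifier scheme (a readable prefix plus an encoded timestamp), the `dct` top-level key, and the field names are hypothetical and may differ from what the Constructor app and the DCT YAML standard actually do.

```python
import json
import re
import time
from typing import Optional

ALPHABET = "0123456789abcdefghijklmnopqrstuvwxyz"


def encode_base36(number: int) -> str:
    """Encode a non-negative integer using digits 0-9 and letters a-z."""
    if number < 0:
        raise ValueError("number must be non-negative")
    if number == 0:
        return "0"
    digits = []
    while number:
        number, remainder = divmod(number, 36)
        digits.append(ALPHABET[remainder])
    return "".join(reversed(digits))


def make_identifier(prefix: str, timestamp: Optional[int] = None) -> str:
    """Hypothetical scheme: readable prefix + '_' + encoded creation time."""
    if not re.fullmatch(r"[A-Za-z][A-Za-z0-9]*", prefix):
        raise ValueError("prefix must start with a letter and be alphanumeric")
    if timestamp is None:
        timestamp = int(time.time())  # seconds since the Unix epoch
    return f"{prefix}_{encode_base36(timestamp)}"


def to_yaml(spec: dict[str, str]) -> str:
    """Write a flat spec as YAML; JSON-quoted strings are valid YAML scalars."""
    lines = ["dct:"]  # assumed top-level key
    for key, value in spec.items():
        lines.append(f"  {key}: {json.dumps(value)}")
    return "\n".join(lines) + "\n"


# Placeholder specification with hypothetical field names.
spec = {
    "id": make_identifier("example"),
    "label": "Example construct",
    "definition": "A placeholder definition, written out in full sentences.",
}
print(to_yaml(spec))
```

Embedding the creation time in the identifier is one way to let anyone generate identifiers without a central registry, since two people using the same prefix would only collide if they did so within the same second.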

🧵 12/15

How to use DCT specifications

Then, we discuss how to use DCT specifications in primary empirical research (both quantitative and qualitative), in literature reviews, in theory development, in practice, and in large-scale collaborations.

🧵 13/15

What I really like about DCTs is that they’re so super-simple, almost trivial.

Mostly, they’re a concrete, tangible tool for better conceptual clarification - but a tool that also helps to share, re-use, and adapt.

They can help a lab to do more consistent research; in teaching, repositories can help students; and they can help knowledge translation towards practitioners.

🧵 14/15

Review along at Meta-Psychology

Last toot: we submitted this to Meta-Psychology. That means that everybody’s invited to peer review along!

Head over to https://doi.org/jnjp and you can annotate using https://hypothes.is 🤩

🧵 15/15